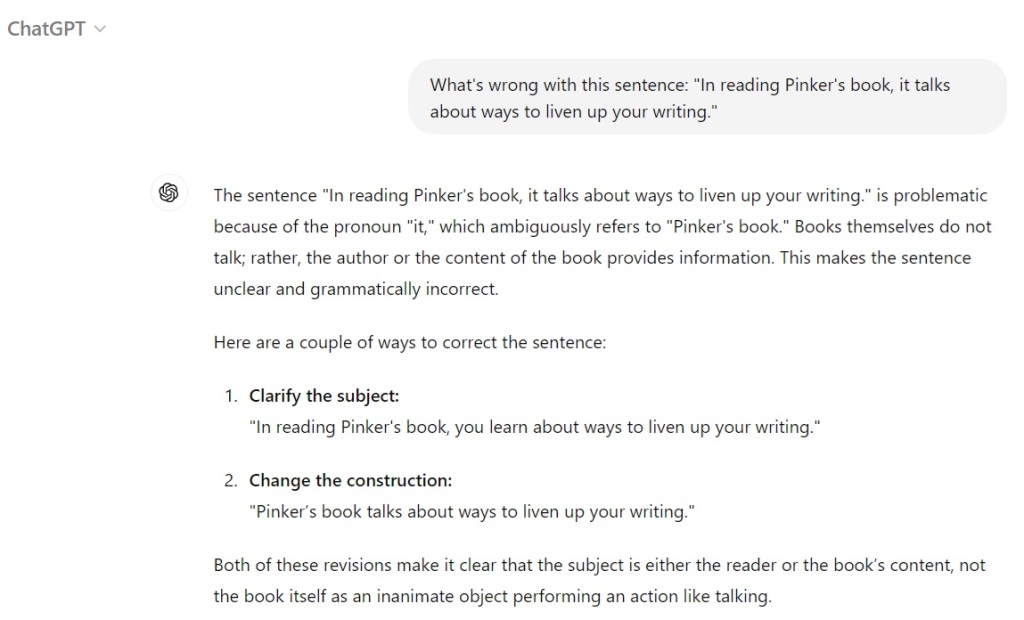

Here’s what happened when I fed ChatGPT the prompt I fed Gemini earlier:

Like Gemini, Chat misses the dangler and misidentifies a different issue. I somehow find it endearing that it first tells me that books can’t talk, then provides a revision in which the book talks, and claims that this revision makes it clear that it’s the book’s content that’s talking, not the book itself.

Of course, content doesn’t perform actions like talking any more than inanimate objects do.

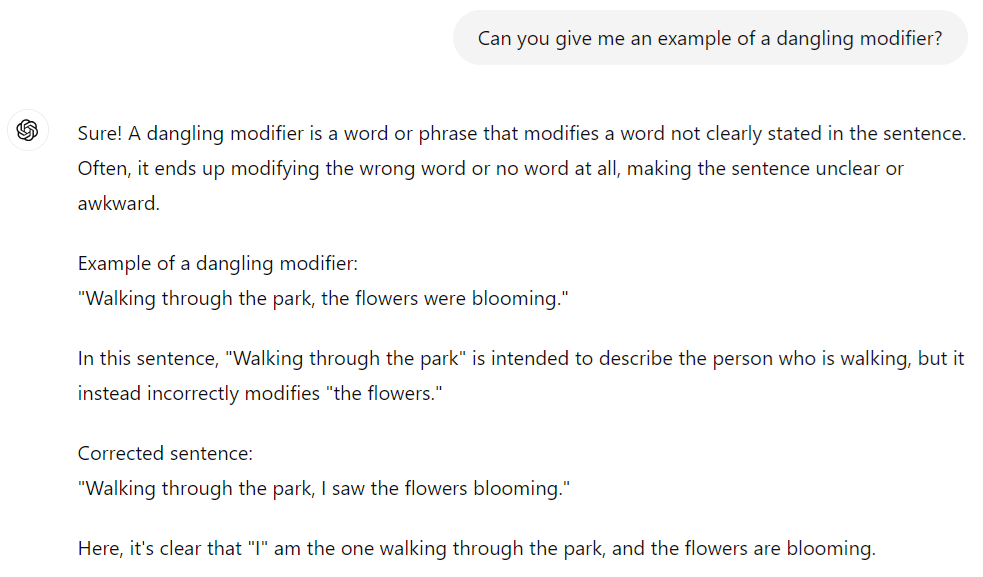

Like Gemini, Chat is better at generating than identifying:

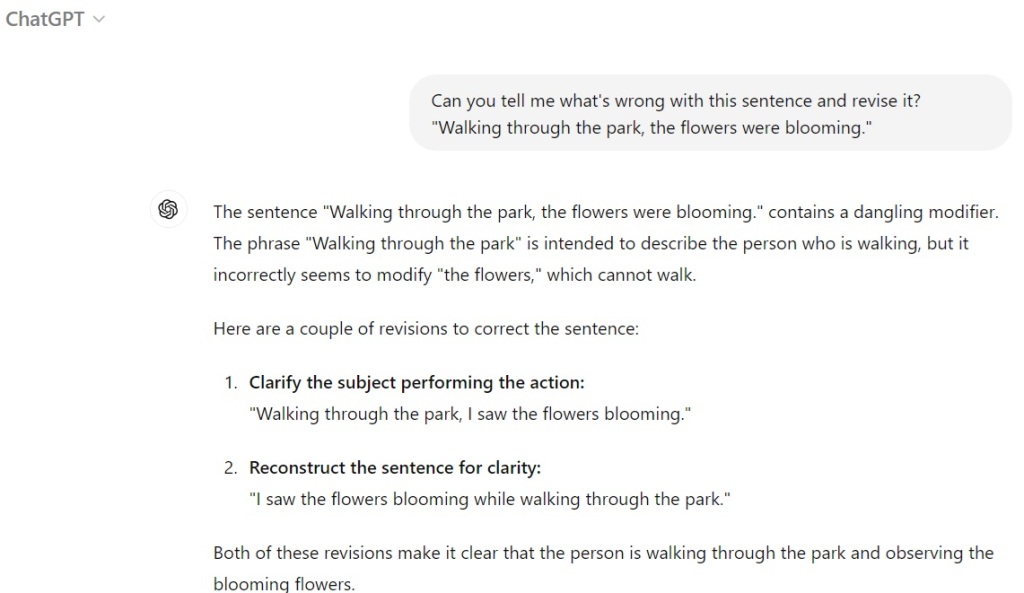

And like Gemini, it can identify its own dangler when you feed it back to it:

Interestingly, it was also able to identify the dangler that Gemini produced for me two days ago.

On the other hand, when I fed Gemini Chat’s dangler, it crashed…. Which raises the question: what will happen to LLM performance as more and more of their inputs include their own outputs?

My all-time favorite dangling (or just plain wrong) modifier is one that I read in a New York Times comment. Here it is:

“As a little girl, my father liked to take me to the movies.”

You’d be surprised how many people don’t see a problem with it.


Priceless–thanks for sharing!
