I spent several years of my life fending off injunctions re critical thinking, a phrase that now seems to have been displaced by thinking classrooms.

(What is a thinking classroom, you ask? I’m going to make a wild guess and say group projects. . . . Yup. Group projects, only standing up. Called it.)

Not that I’m against thinking. Hardly. But critical thinking proponents always assumed we all knew what critical thinking was. Or, more accurately, they assumed we all agreed with their unspoken beliefs about what it was.

A couple of weeks ago I came across a person — a philosopher of education, Robert H. Ennis — who’s spent decades attempting to define the term. Critical thinking, per Ennis, is “reasonable, reflective thinking that is focused on deciding what to believe or do.”

Hmmm. “Deciding what to believe or do” makes sense to me as a definition of the goal of thinking “critically.” But “reasonable and reflective” doesn’t really tell me how to distinguish between critical thinking and not-critical thinking.

Setting that aside, professors Gary Smith and Jeffrey Funk, writing in The Chronicle of Higher Education, tell us that, for Ennis, reasonable reflective thinking has eleven characteristics:

- Being open-minded and mindful of alternatives

- Trying to be well-informed

- Judging well the credibility of sources

- Identifying conclusions, reason, and assumptions

- Judging well the quality of an argument, including the acceptability of its reasons assumptions, and evidence

- Developing and defending a reasonable position

- Asking appropriate clarifying questions

- Formulating plausible hypotheses; planning experiments well

- Defining terms in a way that’s appropriate for the context

- Drawing conclusions when warranted, but with caution

- Integrating all items in this list when deciding what to believe or do

source: When It Comes to Critical Thinking, AI Flunks the Test by Gary Smith and Jeffrey Funk. 3/12/2024 Chronicle of Higher Education

Smith and Funk’s point: all of these are human activities.

Can’t be performed by LLMs.

to be continued

And see:

What is critical thinking, apart from something AI can’t do?

Overpromising, under-delivering

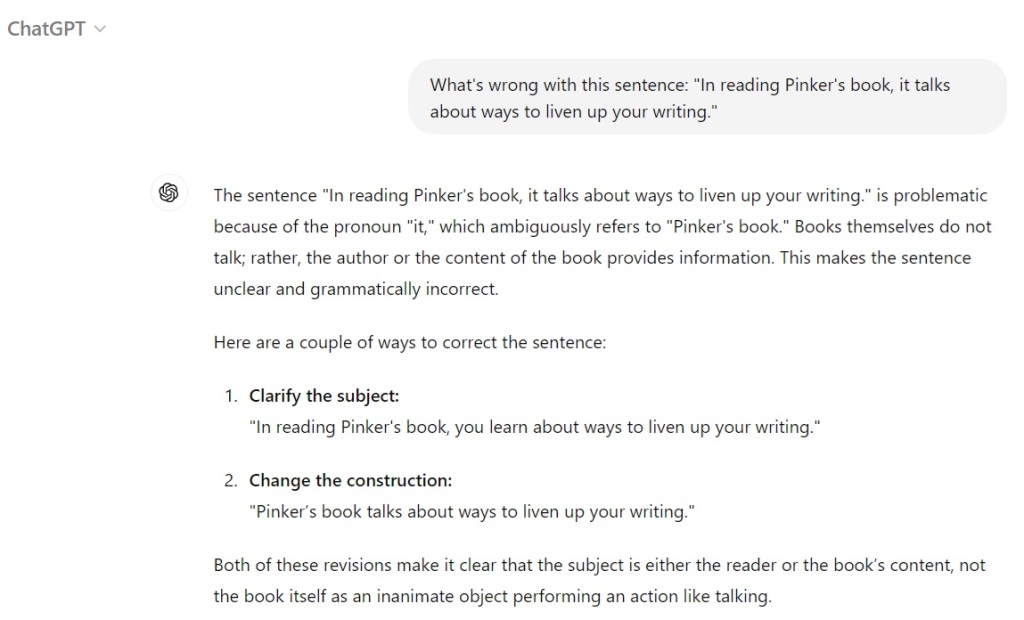

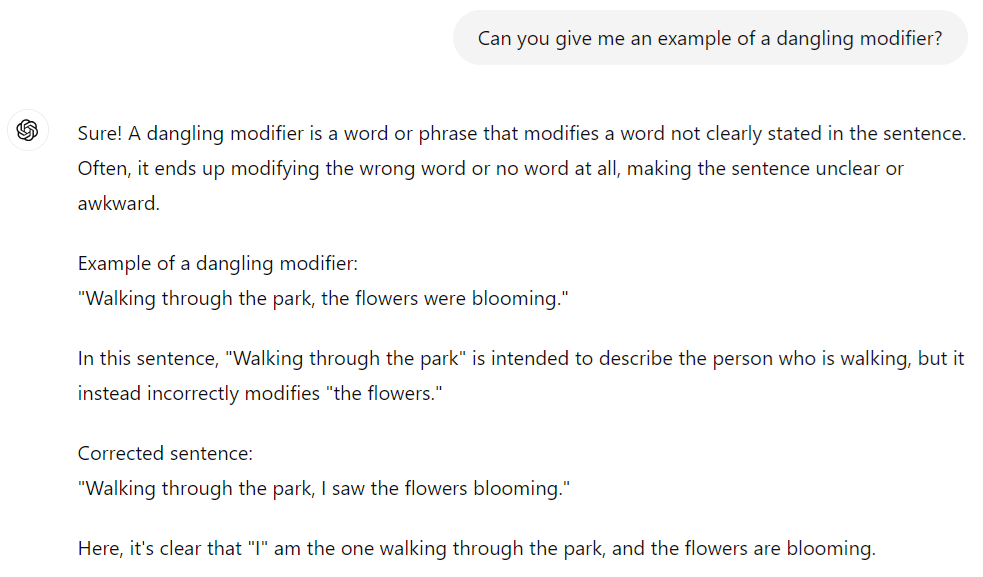

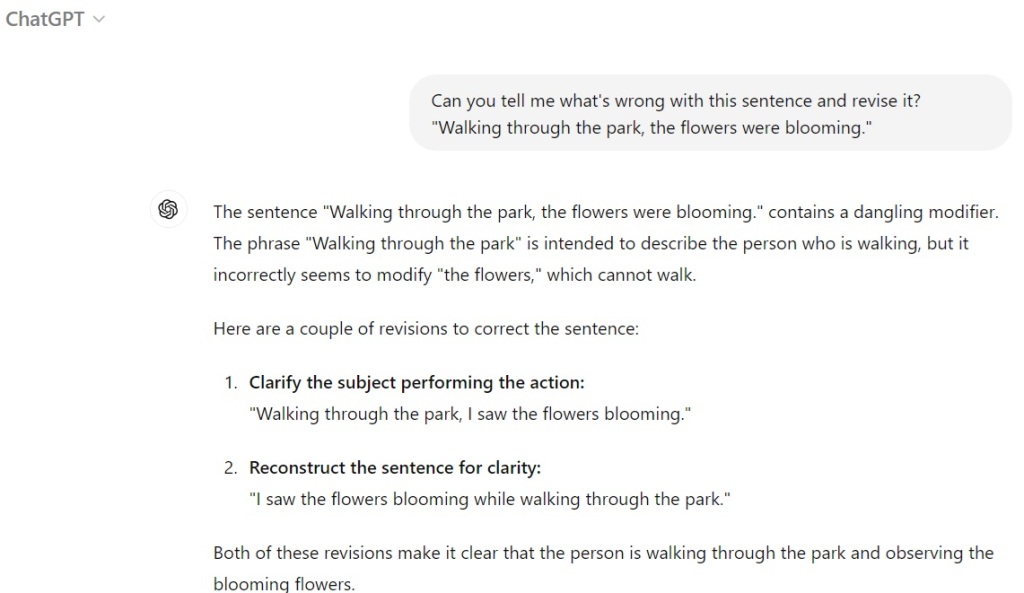

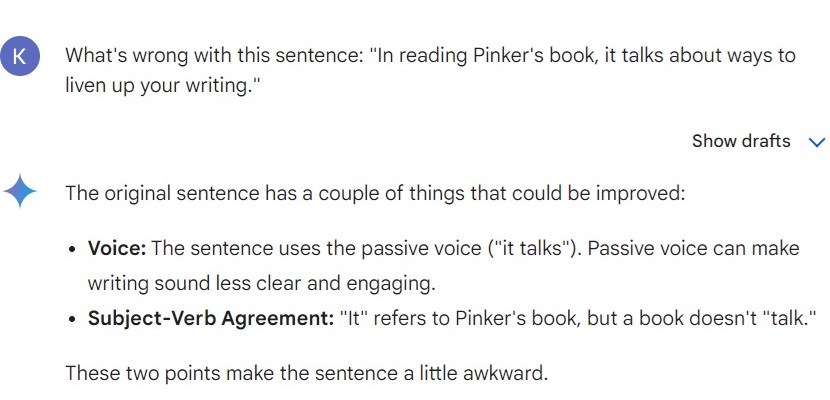

AI-proofing an exam question

Artificial intelligence: other posts